New Epolitics.com contributor Michael Khoo (of Spitfire Strategies) looks at online activism around Net Neutrality and teases out some valuable lessons about clicktivism…and the ability of Big Data to complicate online campaigners’ lives.

The Sunlight Foundation recently released a study of the one million-plus public comments on net neutrality submitted to the Federal Communications Commission. Thanks to big data and analytics, people can see exactly who’s winning and who’s losing the public debate: 99% of the comments supported net neutrality and 1% opposed it. One percent!

Using analytics, people can also see which groups’ actions generated the most comments. As detailed feedback numbers become more common, nonprofits could be judged by their clicktivism rates. Think of it as big data and open government meet Charity Navigator and Guidestar.

Online Advocacy’s Depth & Breadth

The Sunlight Foundation analysis counters the notion that “clicktivism” is the shallowest form of issue engagement — a concern for progressives who might muster a million signatures only to have them trashed with a keystroke by skeptical Congressional staff.

The report showed that 60% of the net neutrality comments were form-generated [Ed note: spat out by an organization’s online advocacy module with pre-packaged text, one comment per consenting activist]. That is far better than on many other issues, where 75-90% of comments might be form-generated. And even among the form-generated net neutrality comments, Sunlight found that “many submitters take the opportunity to personalize their comment beyond what was supplied by the campaign’s template language.” Signers weren’t just following like zombies.

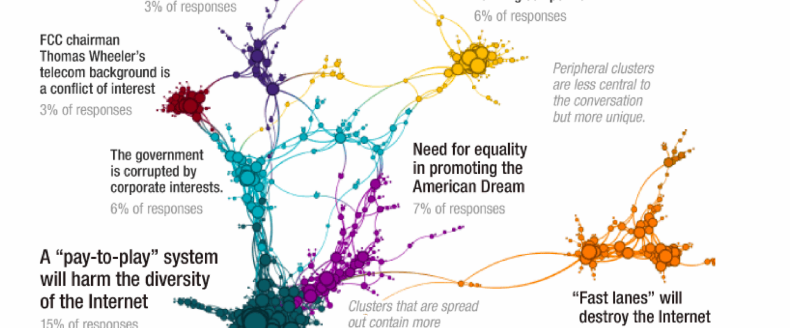

In August, the Knight Foundation and Quid research released a similar study on the net neutrality comments and corresponding media debate. Quid showed that while the media narrative was focused on corporate control and Washington corruption, people were talking about fairness, diversity of public voices and what it means to be American. Public Knowledge’s SVP Harold Feld commented that it showed “people are engaged on this issue to a remarkable degree, and are drawing their own conclusions about it rather than echoing talking points — even talking points from trusted sources.”

Who Did What

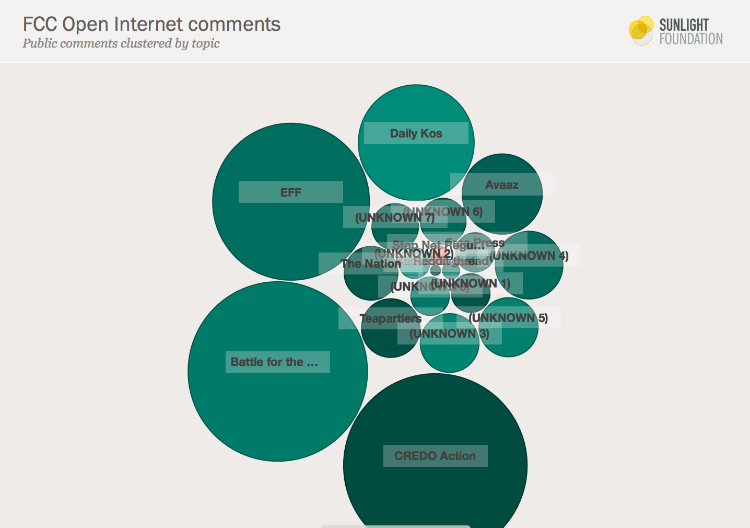

The Sunlight report shows that the number of comments brought to the FCC by the Tea Party pales in comparison to the number brought by progressive groups. This should make it easier for FCC decision-makers to justify strong action.

But nonprofits should also be concerned about how decision-makers, donors or activists might use data like these to evaluate the efforts of different groups.

These numbers do paint a picture for evaluators, but they tell only part of the story. It’s clear from the data that EFF (Electronic Frontier Foundation) generated more comments than Avaaz, for instance. But what if one group worked on the issue for a week, and the other for a year? Will that information show up in a Charity Navigator rating of the two groups’ effectiveness, or form the linchpin of a foundation grant decision? What about groups working under a jointly branded campaign? Will their individual efforts one day be undervalued by an algorithm that uses raw data to measure their effectiveness? And what will happen to corporate campaigns, where data is more privately held?

The biggest danger with big data is not the data itself but how it will be interpreted and used. Just ask the teenaged Target customer whose purchase history led to an ad campaign that then exposed her pregnancy to her father.

This inevitable marriage of big data and activism will come with advantages and disadvantages for digital campaigners and nonprofits. We know clicktivism can represent the kind of deep engagement we need from grassroots activists, which is an important development. Now, nonprofits need to make sure evaluation techniques become equally clear and useful.